Bias-Variance Tradeoff

The bias-variance trade-off is the tension between underfitting (high bias) and overfitting (high variance).

Bias & Variance in ML

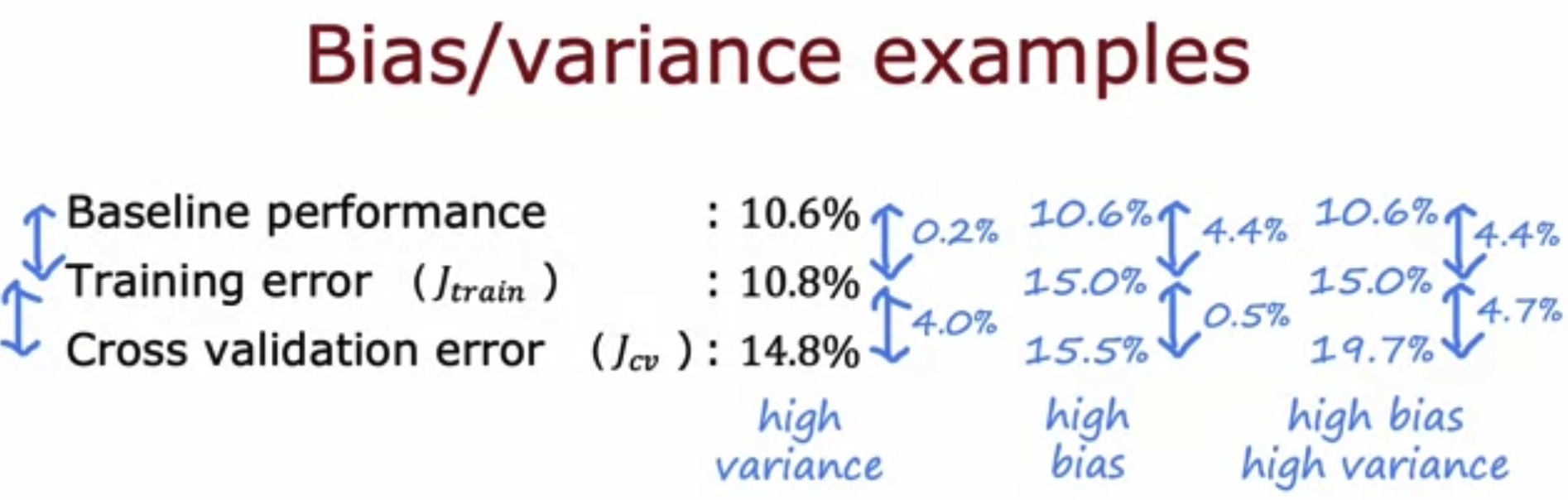

Evaluate bias & variance

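You can evaluate bias and variance by comparing the training-set error with the dev-set error. The labels below assume that the best achievable (Bayes or human-level) error is close to 0%; if that baseline is higher, "high" error should be judged relative to it.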

| Train set error | Dev set error | Diagnosis |
|---|---|---|
| low (1%) | high (11%) | high variance |
| high (15%) | high, but near train set error (16%) | high bias |
| high (15%) | high, but much higher than train set error (30%) | high bias & high variance |
| low (0.5%) | low (1%) | low bias & low variance |

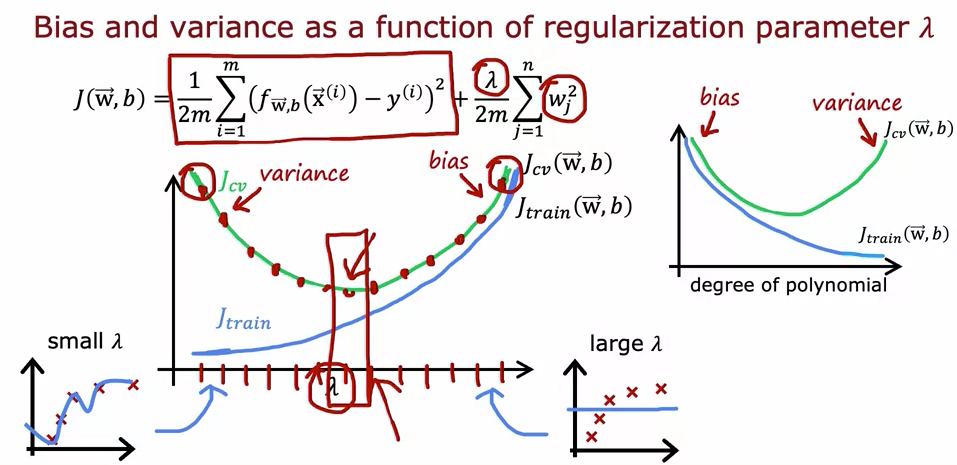
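The table can be turned into a small rule of thumb. The sketch below is a minimal illustration, not a standard API: `diagnose`, `Diagnosis`, `baseline_err` and the 5-percentage-point `gap_threshold` are all hypothetical choices. `baseline_err` defaults to 0, matching the table's implicit assumption that near-0% error is achievable.

```python
from dataclasses import dataclass


@dataclass
class Diagnosis:
    high_bias: bool
    high_variance: bool

    def label(self) -> str:
        """Return the row label used in the table."""
        if self.high_bias and self.high_variance:
            return "high bias & high variance"
        if self.high_bias:
            return "high bias"
        if self.high_variance:
            return "high variance"
        return "low bias & low variance"


def diagnose(train_err: float, dev_err: float,
             baseline_err: float = 0.0, gap_threshold: float = 0.05) -> Diagnosis:
    """Classify a model from its training and dev-set error rates (fractions, not %).

    baseline_err:  assumed achievable (e.g. human-level) error; hypothetical default 0.0.
    gap_threshold: hypothetical cut-off for what counts as a "large" gap.
    """
    avoidable_bias = train_err - baseline_err  # how far training error sits above the baseline
    variance_gap = dev_err - train_err         # how much worse the model does on unseen data
    return Diagnosis(high_bias=avoidable_bias > gap_threshold,
                     high_variance=variance_gap > gap_threshold)


if __name__ == "__main__":
    # The four rows of the table above.
    for train_err, dev_err in [(0.01, 0.11), (0.15, 0.16), (0.15, 0.30), (0.005, 0.01)]:
        print(f"train={train_err:.1%} dev={dev_err:.1%} -> {diagnose(train_err, dev_err).label()}")
```

Passing a higher `baseline_err` (say 0.14 for a task where even humans get about 14% wrong) turns the 15%/16% row from "high bias" into "low bias & low variance", which is why the baseline matters.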

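Regularization influences the bias-variance trade-off

See Cost Functions#Cost function with regularization and Regularization. A larger regularization parameter λ shrinks the weights, which lowers variance but can raise bias; a smaller λ does the opposite.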

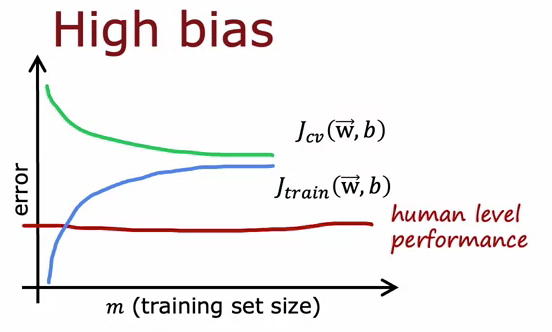

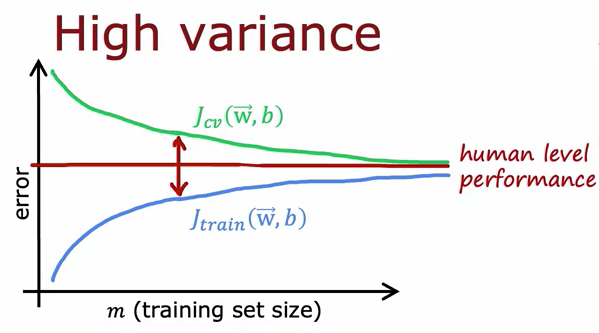
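To see the effect of λ numerically, the sketch below fits a degree-8 polynomial with closed-form L2-regularized (ridge) linear regression for several values of λ. It assumes the common regularized cost J(θ) = (1/2m)Σ(h_θ(x) - y)² + (λ/2m)Σ_{j≥1} θ_j², with the bias term θ_0 left unpenalized; the synthetic sin(πx) data, the helper names and the λ grid are illustrative assumptions.

```python
import numpy as np


def polynomial_features(x: np.ndarray, degree: int) -> np.ndarray:
    """Map a 1-D input to the columns [1, x, x^2, ..., x^degree]."""
    return np.vander(x, degree + 1, increasing=True)


def fit_regularized(X: np.ndarray, y: np.ndarray, lam: float) -> np.ndarray:
    """Closed-form minimiser of (1/2m)*||X @ theta - y||^2 + (lam/2m)*sum(theta[1:]**2).

    Setting the gradient to zero gives (X^T X + lam * L) theta = X^T y, where L is
    the identity matrix with L[0, 0] = 0 so the bias term theta[0] is not penalised.
    """
    L = np.eye(X.shape[1])
    L[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + lam * L, X.T @ y)


def half_mse(X: np.ndarray, y: np.ndarray, theta: np.ndarray) -> float:
    """Unregularised cost (1/2m)*||X @ theta - y||^2, used to report train/dev error."""
    return float(np.mean((X @ theta - y) ** 2) / 2)


def sweep_lambda(lambdas, degree: int = 8, seed: int = 0):
    """Fit one polynomial model per lambda on synthetic data; return (train_errs, dev_errs)."""
    rng = np.random.default_rng(seed)
    # Hypothetical data: y = sin(pi * x) + Gaussian noise; 15 training and 50 dev examples.
    x_train = rng.uniform(-1, 1, 15)
    y_train = np.sin(np.pi * x_train) + rng.normal(0, 0.2, 15)
    x_dev = rng.uniform(-1, 1, 50)
    y_dev = np.sin(np.pi * x_dev) + rng.normal(0, 0.2, 50)

    X_train = polynomial_features(x_train, degree)
    X_dev = polynomial_features(x_dev, degree)
    train_errs, dev_errs = [], []
    for lam in lambdas:
        theta = fit_regularized(X_train, y_train, lam)
        train_errs.append(half_mse(X_train, y_train, theta))
        dev_errs.append(half_mse(X_dev, y_dev, theta))
    return train_errs, dev_errs


if __name__ == "__main__":
    lambdas = [0.0, 0.01, 0.1, 1.0, 10.0, 100.0]
    train_errs, dev_errs = sweep_lambda(lambdas)
    for lam, tr, dv in zip(lambdas, train_errs, dev_errs):
        print(f"lambda={lam:<6} train={tr:.4f} dev={dv:.4f}")
```

The training error can only stay flat or rise as λ grows, since the fit is traded for smaller weights. The dev error typically falls at first (less variance) and then rises again (more bias), and the λ with the lowest dev error is the usual pick.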

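Learning curves

Increasing the training set size can:

- lower the cross-validation error, if the learning algorithm suffers from high variance

- barely help, if the learning algorithm suffers from high bias, because the training and cross-validation errors have already converged to a similarly high value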

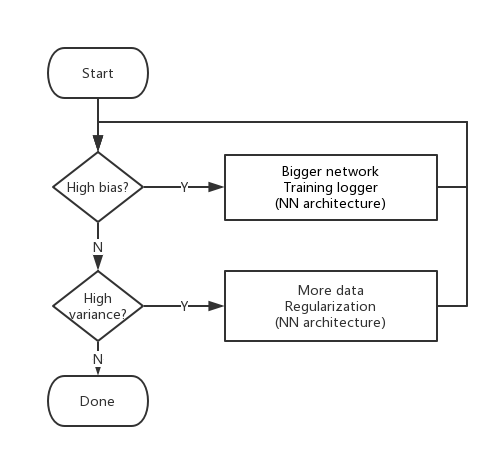
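A learning curve can be produced by training on the first m examples for growing m and recording both errors. The sketch below uses plain least squares on hypothetical quadratic data (y = x² + noise) to contrast a high-bias model (a straight line) with a model that matches the data; the helper names, sizes and data are assumptions for illustration.

```python
import numpy as np


def half_mse(X, y, theta):
    """Cost (1/2m)*||X @ theta - y||^2."""
    return float(np.mean((X @ theta - y) ** 2) / 2)


def learning_curve(X_train, y_train, X_cv, y_cv, sizes):
    """For each m in sizes, fit on the first m training examples and record both errors."""
    train_errs, cv_errs = [], []
    for m in sizes:
        theta, *_ = np.linalg.lstsq(X_train[:m], y_train[:m], rcond=None)
        train_errs.append(half_mse(X_train[:m], y_train[:m], theta))  # error on the m examples used for fitting
        cv_errs.append(half_mse(X_cv, y_cv, theta))                   # error on the whole cross-validation set
    return train_errs, cv_errs


def make_data(n, rng):
    """Hypothetical quadratic data: y = x^2 + Gaussian noise (std 0.1)."""
    x = rng.uniform(-1, 1, n)
    return x, x ** 2 + rng.normal(0, 0.1, n)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x_tr, y_tr = make_data(200, rng)
    x_cv, y_cv = make_data(200, rng)
    sizes = [3, 5, 10, 20, 50, 100, 200]

    # High-bias model: a straight line [1, x] cannot represent x^2.
    lin = learning_curve(np.vander(x_tr, 2, increasing=True), y_tr,
                         np.vander(x_cv, 2, increasing=True), y_cv, sizes)
    # Adequate model: [1, x, x^2] matches the data-generating process.
    quad = learning_curve(np.vander(x_tr, 3, increasing=True), y_tr,
                          np.vander(x_cv, 3, increasing=True), y_cv, sizes)
    for m, lt, lc, qt, qc in zip(sizes, *lin, *quad):
        print(f"m={m:<4} linear train={lt:.4f} cv={lc:.4f} | quadratic train={qt:.4f} cv={qc:.4f}")
```

For the straight line, both errors should level off at a similar, high value as m grows, so more data does not help; for the quadratic model the cross-validation error keeps falling toward the noise level.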

How to fix

- High bias

    - try getting additional features

    - try adding polynomial features

    - try decreasing the regularization parameter λ

- High variance

    - get more training examples

    - try a smaller set of features

    - try increasing the regularization parameter λ
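The checklist can also be encoded as a lookup driven by the diagnosis. `suggest_fixes` and `FIXES` below are hypothetical names; the function simply takes the two flags, for instance from a train/dev error comparison like the one in the table above.

```python
from typing import List

# Remedies copied from the checklist, keyed by the problem they address.
FIXES = {
    "high bias": [
        "try getting additional features",
        "try adding polynomial features",
        "try decreasing the regularization parameter λ",
    ],
    "high variance": [
        "get more training examples",
        "try a smaller set of features",
        "try increasing the regularization parameter λ",
    ],
}


def suggest_fixes(high_bias: bool, high_variance: bool) -> List[str]:
    """Return the checklist items that apply to the given diagnosis."""
    suggestions: List[str] = []
    if high_bias:
        suggestions += FIXES["high bias"]
    if high_variance:
        suggestions += FIXES["high variance"]
    return suggestions


if __name__ == "__main__":
    for item in suggest_fixes(high_bias=False, high_variance=True):
        print("-", item)
```

When both flags are set, the two λ suggestions point in opposite directions; the sketch returns both lists and leaves that choice to judgment.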

Bias & Variance in Deep Learning

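Unlike classical ML models, deep learning models rarely force a bias-variance trade-off: training a bigger network usually reduces bias without hurting variance (as long as it is regularized properly), and getting more training data usually reduces variance without hurting bias.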